1. Range

The range is the interval between the minimum and maximum values that a measuring instrument can measure, for example 0 to 100°C. It is the first thing to check when deciding whether an instrument suits a particular measurement in an industrial application.

2. Measuring Range

The measuring range is the set of values over which the instrument is intended to operate and provide readings within its stated accuracy. It can be narrower than the total range the instrument is able to indicate, so it is important to distinguish between the two.

3. Span

The span is the difference between the upper and lower range limits of a measuring instrument. For instance, if an instrument measures from 0 to 100°C, its span is 100°C. Span helps to determine how much variation the instrument can handle during measurements.
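
As a minimal sketch of the arithmetic above, the span can be computed directly from the two range limits. The function name `span` and its arguments are illustrative choices, not part of any standard library.

```python
def span(lower_limit: float, upper_limit: float) -> float:
    """Return the span: upper range limit minus lower range limit."""
    if upper_limit <= lower_limit:
        raise ValueError("upper_limit must be greater than lower_limit")
    return upper_limit - lower_limit


print(span(0.0, 100.0))   # 0 to 100 °C instrument -> 100.0
print(span(50.0, 100.0))  # 50 to 100 °C instrument -> 50.0
```

The second call shows that an instrument whose range does not start at zero has a span smaller than its upper range limit.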

4. Performance Characteristic

This term encompasses all the factors that affect an instrument’s ability to measure accurately, such as its linearity, repeatability, and response time. Understanding the performance characteristics helps users select the most appropriate instrument for their specific applications.

5. Linear Scale

In a linear scale, the increments between measurements are evenly spaced, ensuring consistent readings across the instrument’s range. Linear scales are commonly used in devices requiring precision and predictability in readings, such as in pressure gauges or thermometers.

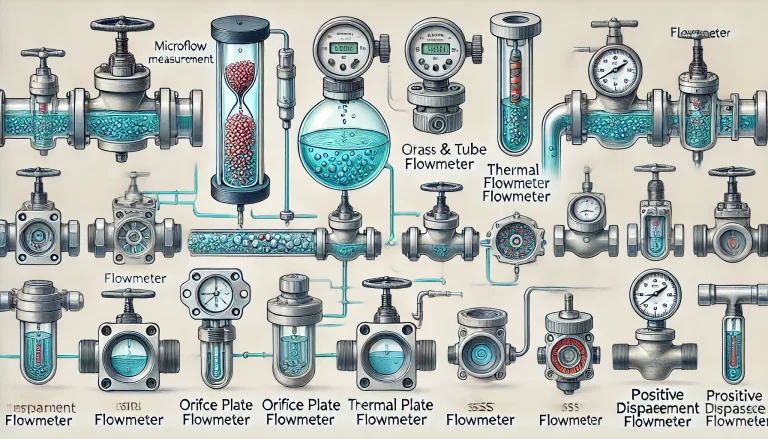

6. Nonlinear Scale

A nonlinear scale differs from a linear one in that the intervals between values are not uniform. Nonlinear scales are used when the relationship between the measurement and the output is not proportional, as seen in some types of flow meters.

7. Suppressed-Zero Scale

This scale is used when measurements do not start from zero. For example, an instrument that measures temperatures between 50°C and 100°C might suppress readings below 50°C to focus on the relevant range for the application.

8. Calibration

Calibration is the process of comparing an instrument’s readings with a known standard to establish how far they deviate from it and, where necessary, adjusting the instrument so that it measures accurately again. Regular calibration is essential for maintaining the accuracy of the instrument over time.

9. Traceability

Traceability in instrumentation ensures that the measurements from a specific device can be traced back to national or international standards. This is important for maintaining consistency and reliability across different devices and measurements.

10. Sensitivity

Sensitivity is the ability of an instrument to detect small changes in the measured quantity. A highly sensitive instrument will pick up even minute variations, which is crucial in industries requiring precise control, such as pharmaceuticals or aerospace.

11. Accuracy Class

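Instruments are often classified into accuracy classes based on how closely their readings match the true value. An instrument in a better accuracy class keeps its errors within tighter limits, which is essential for applications where even minor deviations can have significant consequences. Classes are commonly designated by a number that expresses the permitted error, so a smaller class number generally denotes a more accurate instrument.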

12. Intrinsic Error

Intrinsic error refers to the errors inherent to the instrument itself, often due to design limitations or aging components. Understanding and accounting for intrinsic error is important for users to interpret the readings correctly and make necessary adjustments.

13. Scale

A scale is the set of graduated markings on an instrument that indicate the value of the quantity being measured. Scales can be linear, nonlinear, or logarithmic, depending on the instrument and the nature of the measurement.

14. Scale Range

The scale range specifies the interval between the minimum and maximum values that the scale on an instrument can show. This is different from the instrument’s measuring range, which is the operational range of the instrument.

15. Scale Mark

A scale mark is a line or other symbol on the scale that corresponds to a particular value of the measured quantity. These marks help users read the measurement accurately.

16. Zero Scale Mark

This refers to the mark that indicates the zero point on the scale. The zero scale mark is a crucial reference for calibration and accurate readings, particularly when the instrument is used for differential measurements.

17. Scale Division

A scale division is the part of the scale between two consecutive scale marks. The smallest division determines the resolution of the instrument, that is, how finely it can distinguish between different values.

18. Value of Scale Division

This term refers to the actual value that each division on the scale represents. For example, if the scale is marked every 10 units, the value of each scale division is 10.
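
For a uniformly graduated scale, the value of a scale division follows from the scale limits and the number of divisions. The sketch below assumes a linear scale with equal divisions; the function name and the example figures are illustrative only.

```python
def value_of_scale_division(min_value: float, max_value: float, divisions: int) -> float:
    """Value represented by one division on a linear, evenly divided scale."""
    if divisions <= 0:
        raise ValueError("divisions must be a positive integer")
    return (max_value - min_value) / divisions


# A 0 to 100 scale marked every 10 units has 10 divisions.
print(value_of_scale_division(0.0, 100.0, 10))   # 10.0

# A 50 to 100 scale split into 25 divisions.
print(value_of_scale_division(50.0, 100.0, 25))  # 2.0
```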

19. Scale Spacing

Scale spacing describes the distance between successive marks on the scale. It is designed in a way that ensures clear and easy reading of the instrument’s measurements.

20. Scale Length

Scale length is the total length of the scale on the measuring instrument, measured along the scale from the first mark to the last. A longer scale may allow for more precise readings, because the same range is spread over a greater distance.

21. Minimum Scale Value

The minimum scale value is the lowest value that can be read from the scale. It is often zero, but it lies above zero when a suppressed-zero scale is used.

22. Maximum Scale Value

Conversely, the maximum scale value is the highest value that the scale can indicate. When selecting an instrument, users check that its maximum scale value is high enough for the application.

23. Scale Numbering

Scale numbering refers to how numbers are arranged on the scale. They can be arranged incrementally or in a more complex sequence depending on the measurement type.

24. Zero of a Measuring Instrument

This is the point on the instrument’s scale that represents a value of zero in the measurement. Ensuring that the zero point is correctly calibrated is essential for accurate measurements.

25. Instrument Constant

An instrument constant is a value specific to a given measuring device that relates its output to the actual measured quantity. It is often used in calculations to convert readings into the desired units.
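
A simple case is a multiplicative constant, where the raw indication is multiplied by the constant to obtain the measured value. The sketch below assumes that case; the numbers and the unit (volts per division) are hypothetical.

```python
def measured_value(indication: float, instrument_constant: float) -> float:
    """Convert a raw indication to the measured value using a multiplicative constant."""
    return indication * instrument_constant


# Hypothetical meter: the pointer shows 42 divisions and the constant is 0.5 V per division.
print(measured_value(42, 0.5))  # 21.0
```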

26. Characteristic Curve

The characteristic curve of an instrument is a graph that shows the relationship between the input and output of the instrument over its range of operation. Understanding this curve helps users know how the instrument will behave under different conditions.

27. Specified Characteristic Curve

This is the ideal or expected performance curve provided by the manufacturer of the instrument. It is used as a standard for comparing actual performance during calibration or testing.

28. Adjustment

Adjustment refers to the process of modifying an instrument to align its performance with the required standards. It is typically done during calibration or maintenance to ensure accurate and reliable readings.

29. Calibration

Calibration is a process where an instrument is tested and adjusted to provide measurements that conform to established standards. It ensures that the instrument’s readings are accurate within a specified tolerance. Calibration is critical in ensuring consistent and reliable data across different measuring tools and environments.

30. Calibration Curve

A calibration curve is a graph that shows the relationship between the measured output of an instrument and the known values of the input over a range. This curve is used to correct any deviations from the expected behavior of the instrument.

31. Calibration Cycle

The calibration cycle is the interval of time between successive calibrations of an instrument. Regular calibration cycles are necessary to maintain accuracy and ensure the instrument remains within operational standards.

32. Calibration Table

A calibration table lists the correction values needed for different points on the scale of an instrument. This table allows users to make manual corrections when interpreting measurements.
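
One way to apply such a table is to interpolate linearly between the tabulated points and add the resulting correction to the indicated value. The sketch below assumes that approach; the table values are invented for illustration and do not come from any real instrument.

```python
from bisect import bisect_right

# Hypothetical calibration table: (indicated value, correction to add), both in °C.
CAL_TABLE = [(0.0, 0.2), (25.0, 0.1), (50.0, -0.1), (75.0, -0.3), (100.0, -0.4)]


def corrected_reading(indicated: float, table=CAL_TABLE) -> float:
    """Add a linearly interpolated correction from the table to the indicated value."""
    points = [point for point, _ in table]
    if not points[0] <= indicated <= points[-1]:
        raise ValueError("indicated value lies outside the calibrated range")

    i = bisect_right(points, indicated)
    if i == len(points):
        # Exactly at the last tabulated point: use its correction directly.
        return indicated + table[-1][1]

    x0, c0 = table[i - 1]
    x1, c1 = table[i]
    correction = c0 + (c1 - c0) * (indicated - x0) / (x1 - x0)
    return indicated + correction


print(round(corrected_reading(25.0), 3))  # tabulated point, correction +0.1
print(round(corrected_reading(37.5), 3))  # halfway between +0.1 and -0.1
```

Values outside the tabulated range are rejected rather than extrapolated, since the table gives no information about the instrument there.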

33. Traceability

Traceability ensures that the measurement can be compared to international or national standards. It establishes a chain of calibration linking the instrument to those standards, ensuring accuracy and reliability across different instruments and industries.

34. Sensitivity

Sensitivity describes how responsive an instrument is to changes in the quantity being measured. A more sensitive instrument can detect smaller changes in the input, making it suitable for applications where precision is critical.
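
Numerically, sensitivity is usually expressed as the change in output divided by the corresponding change in input. The sketch below computes an average sensitivity between two points; the transmitter figures are a hypothetical example.

```python
def sensitivity(input_low: float, input_high: float,
                output_low: float, output_high: float) -> float:
    """Average sensitivity: change in output divided by change in input."""
    if input_high == input_low:
        raise ValueError("input change must be non-zero")
    return (output_high - output_low) / (input_high - input_low)


# Hypothetical transmitter: 4 to 20 mA output over a 0 to 100 °C input.
k = sensitivity(0.0, 100.0, 4.0, 20.0)
print(f"{k:.2f} mA/°C")  # 0.16 mA/°C
```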

35. Accuracy

Accuracy refers to how close a measurement is to the true value. High accuracy is essential in many industries, such as pharmaceuticals, aviation, and manufacturing, where precise measurements can affect safety, quality, and performance.

36. Accuracy Class

Instruments are grouped into accuracy classes according to the limits within which their errors must stay. Instruments in a better accuracy class provide measurements that are closer to the true value, making them suitable for applications that demand high accuracy.

37. Limits of Error

This term defines the acceptable range within which the instrument’s readings may deviate from the true value. Limits of error are crucial for understanding the reliability and usability of an instrument in a given application.
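
Limits of error are often stated as a percentage of the span. The sketch below assumes that convention, which is only one of several in use, and checks whether a reading stays within the limit when compared against a reference value. All names and figures are illustrative.

```python
def within_limits_of_error(indicated: float, reference: float,
                           lower_limit: float, upper_limit: float,
                           percent_of_span: float) -> bool:
    """Return True if the error is within +/- percent_of_span of the span."""
    limit = percent_of_span / 100.0 * (upper_limit - lower_limit)
    error = indicated - reference
    return abs(error) <= limit


# Hypothetical 0 to 100 °C instrument with limits of error of ±0.5 % of span (±0.5 °C).
print(within_limits_of_error(50.3, 50.0, 0.0, 100.0, 0.5))  # True
print(within_limits_of_error(50.8, 50.0, 0.0, 100.0, 0.5))  # False
```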

38. Intrinsic Error

Intrinsic error refers to the errors inherent in the instrument itself, without considering external factors. These errors might be due to the design, materials used, or the physical limits of the measuring device.

39. Conformity

Conformity indicates how closely an instrument’s readings align with a specific standard or expected behavior. Nonconformity indicates deviations from expected performance and might require calibration or adjustment.

40. Independent Conformity

Independent conformity refers to how well an instrument conforms to standards or specifications without being influenced by external factors. This is essential in high-precision measurements where consistency is key.

Understanding these terms is vital for anyone working in industries that rely on precise measurements, such as engineering, manufacturing, and research. By knowing how to interpret and apply these concepts, professionals can ensure they are using the right tools for the job and maintaining the accuracy and reliability of their measurements.