Ultrasonic flaw detectors are indispensable tools in modern non-destructive testing (NDT) and are widely used in industries such as manufacturing, construction, and aerospace. They detect internal defects by exploiting how ultrasonic waves propagate and reflect within materials. Over time, environmental conditions and frequent use can cause the instrument's performance parameters to drift, so regular metrological calibration is essential to keep detection results accurate and reliable.

1. How Ultrasonic Flaw Detectors Work

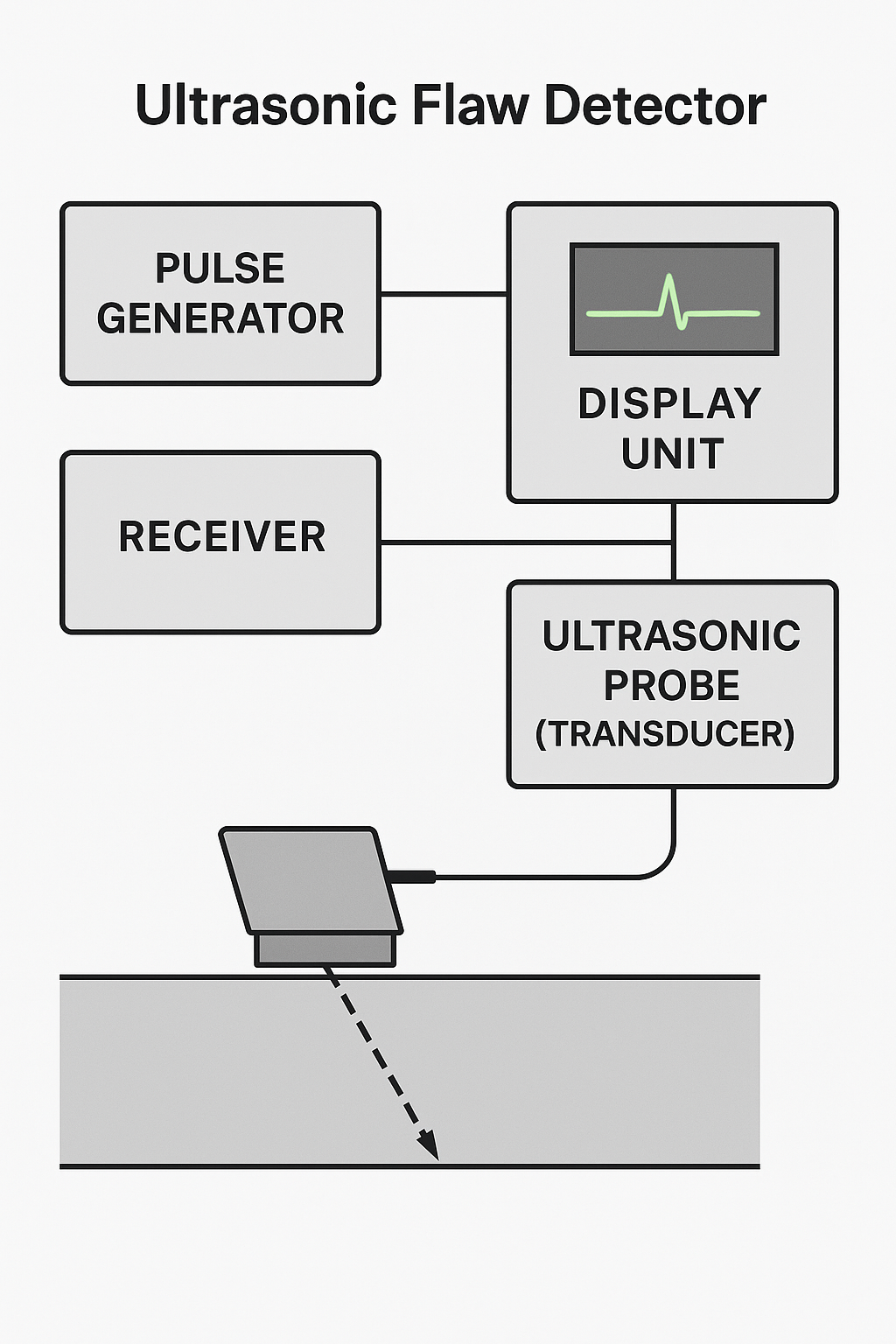

Ultrasonic flaw detectors use a piezoelectric transducer, excited by short electrical pulses, to generate high-frequency sound waves. When these waves encounter internal defects or boundaries within a material, part of their energy is reflected back toward the transducer. The instrument receives and analyzes these echoes: the echo's transit time gives the reflector's location, while its amplitude and shape help estimate the defect's size and nature.
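The location part of this principle reduces to a time-of-flight calculation. Because the echo makes a round trip, the reflector depth is depth = v · t / 2, where v is the sound velocity and t the transit time. A minimal Python sketch, assuming a straight-beam probe at normal incidence, a known longitudinal velocity, and a transit time from which any probe delay has already been removed. The function name is hypothetical, and the steel velocity of about 5920 m/s is a typical textbook value; real alloys vary.

```python
def reflector_depth_mm(time_of_flight_us: float, velocity_m_per_s: float) -> float:
    """Depth of a reflector from the pulse-echo round-trip time.

    Assumes a straight-beam probe and that the probe delay (zero offset)
    has already been subtracted from time_of_flight_us.
    """
    if time_of_flight_us < 0 or velocity_m_per_s <= 0:
        raise ValueError("time must be non-negative and velocity positive")
    # (m/s) * (us) = 1e-6 m = 1e-3 mm; halve it because the path is a round trip.
    return velocity_m_per_s * time_of_flight_us * 1e-3 / 2.0


# Example: a 10 us round trip in steel (about 5920 m/s, assumed).
print(f"{reflector_depth_mm(10.0, 5920.0):.1f} mm")  # prints 29.6 mm
```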

A typical flaw detection system consists of:

Pulse generator

Receiver

Display unit

Ultrasonic probe (transducer)

Probes differing in frequency, beam angle, and coupling method (e.g., contact or immersion) are selected according to the testing requirements to achieve suitable detection sensitivity and resolution.
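Frequency matters because the wavelength, λ = v / f, sets the scale of what the beam can resolve; a frequently quoted rule of thumb is that reflectors much smaller than about half a wavelength are difficult to detect. The sketch below only computes wavelengths; the velocity value and the list of probe frequencies are illustrative assumptions, and the function name is hypothetical.

```python
def wavelength_mm(velocity_m_per_s: float, frequency_mhz: float) -> float:
    """Wavelength lambda = v / f, returned in millimetres."""
    if velocity_m_per_s <= 0 or frequency_mhz <= 0:
        raise ValueError("velocity and frequency must be positive")
    # (m/s) / (MHz) = (m/s) / (1e6 1/s) = 1e-6 m = 1e-3 mm
    return velocity_m_per_s / frequency_mhz * 1e-3


# Assumed longitudinal velocity in steel; higher frequency gives a shorter wavelength.
for f_mhz in (2.0, 5.0, 10.0):
    lam = wavelength_mm(5920.0, f_mhz)
    print(f"{f_mhz:>4} MHz: wavelength {lam:.2f} mm, half-wavelength {lam / 2:.2f} mm")
```

Higher frequencies resolve smaller, closer reflectors but are attenuated more strongly, which is one reason probe selection depends on both the material and the task.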

2. Basic Concepts and Calibration Standards

Calibration refers to the standardized process of comparing the instrument’s measurement output with known reference values to evaluate its accuracy and reliability.
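In its simplest form, each comparison yields an error (indicated value minus reference value) that is judged against a maximum permissible error. A minimal sketch; the class name, field names, and the 0.5 mm tolerance in the example are hypothetical, and real limits come from the applicable standard or procedure.

```python
from dataclasses import dataclass


@dataclass
class CalibrationPoint:
    reference: float  # known value from the reference standard (e.g. block thickness in mm)
    measured: float   # value indicated by the instrument under calibration
    tolerance: float  # maximum permissible error, same units (hypothetical limit)

    @property
    def error(self) -> float:
        """Indicated value minus reference value."""
        return self.measured - self.reference

    @property
    def passed(self) -> bool:
        """True when the absolute error is within the tolerance."""
        return abs(self.error) <= self.tolerance


point = CalibrationPoint(reference=25.0, measured=25.3, tolerance=0.5)
print(f"error = {point.error:+.2f} mm, pass = {point.passed}")
```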

Key calibration standards include:

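ISO 22232 series (international; characterization and verification of ultrasonic test equipment)

JJG 746-2004 (Chinese national metrological verification regulation for ultrasonic flaw detectors)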

These standards define:

Environmental conditions

Calibration equipment requirements

Calibration parameters and allowable errors

They serve as authoritative references for ensuring traceable and standardized calibration procedures.

3. Key Calibration Parameters for Ultrasonic Flaw Detectors

The following parameters are essential when calibrating an ultrasonic flaw detector:

| Parameter | Description |
|---|---|
| Sensitivity | Ability to detect small defects, often indicated by the minimum detectable flaw size. |
| Resolution | Ability to distinguish between two closely spaced flaws. |
| Linearity | Accuracy of time (distance) and amplitude indications across the full range; covers the two checks below. |
| Time Base (Horizontal) Linearity | Verifies that echo positions are proportional to sound path length, so distance and depth readings are correct. |
| Vertical Linearity | Verifies that displayed echo amplitude is proportional to the input signal amplitude. |
| Noise Level | Background electrical noise that can mask small echoes and affect flaw detection. |
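The two linearity checks can be expressed as simple error calculations. The sketch below assumes one common approach. For time base linearity, a series of equally spaced echoes (e.g. multiple backwall echoes from a plate of uniform thickness) is read off the screen and each position is compared with the straight line through the first and last echo. For vertical linearity, the gain is reduced in known dB steps and each displayed height is compared with the height predicted by 20·log10 amplitude scaling, assuming the attenuator itself is accurate. Function names and readings are hypothetical; the exact method and acceptance limits should be taken from the applicable standard.

```python
def time_base_linearity_error(positions: list[float], full_scale: float = 100.0) -> float:
    """Largest deviation of equally spaced echoes from the line through the
    first and last echo, as a percentage of full screen width."""
    n = len(positions)
    if n < 3:
        raise ValueError("need at least three echoes")
    first, last = positions[0], positions[-1]
    step = (last - first) / (n - 1)  # ideal spacing between adjacent echoes
    worst = max(abs(p - (first + i * step)) for i, p in enumerate(positions))
    return 100.0 * worst / full_scale


def vertical_linearity_errors(
    start_height: float,
    attenuations_db: list[float],
    heights: list[float],
    full_scale: float = 100.0,
) -> list[float]:
    """Deviation of each displayed echo height from the height predicted by
    the attenuation step, as a percentage of full screen height."""
    if len(attenuations_db) != len(heights):
        raise ValueError("one height reading is needed per attenuation step")
    errors = []
    for db, height in zip(attenuations_db, heights):
        expected = start_height * 10 ** (-db / 20.0)  # amplitude ratio from dB
        errors.append(100.0 * (height - expected) / full_scale)
    return errors


# Hypothetical readings (screen positions and heights in % of full scale).
print(f"time base: {time_base_linearity_error([20.0, 40.3, 59.8, 80.0, 100.0]):.2f} %")
print([round(e, 2) for e in vertical_linearity_errors(100.0, [6.0, 12.0], [50.0, 25.5])])
```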

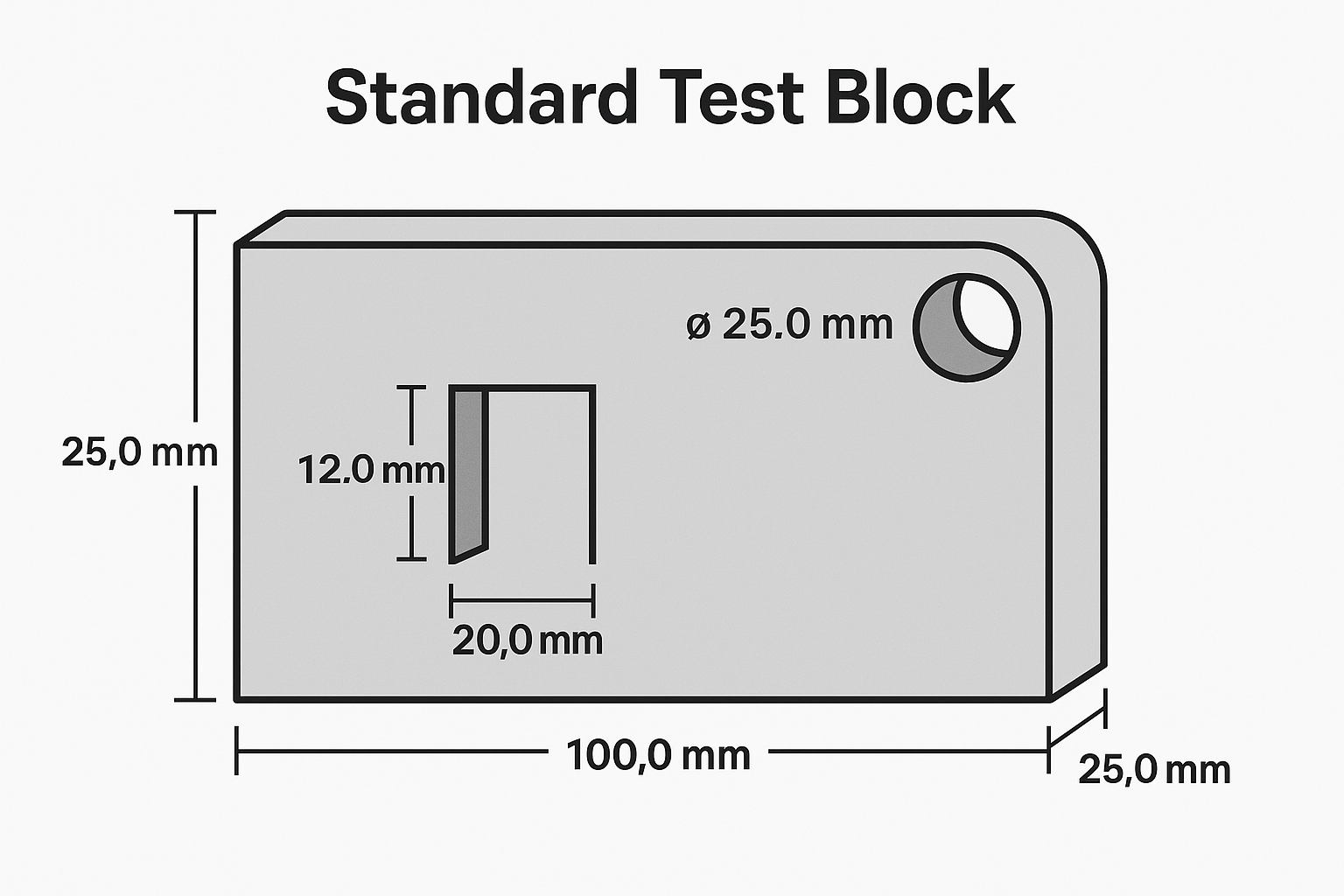

Calibration is performed using reference blocks or standardized test blocks (e.g., the IIW block), and each parameter is checked against the tolerance limits of the applicable standard.
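Reflectors at known distances are what make the time base adjustable. Under the pulse-echo model t = t0 + 2d / v, two echoes from known depths are enough to solve for the material velocity v and the probe zero offset t0. A minimal sketch, assuming a straight-beam probe and echoes at equivalent depths of 25 mm and 50 mm (such as the first and second backwall echoes through a 25 mm thick section); the function name and readings are hypothetical, and real instruments implement their own velocity/zero routines.

```python
def two_point_calibration(
    d1_mm: float, t1_us: float, d2_mm: float, t2_us: float
) -> tuple[float, float]:
    """Solve for sound velocity (m/s) and probe zero offset (us) from two
    echoes at known depths, using the pulse-echo model t = t0 + 2*d/v."""
    if d2_mm <= d1_mm or t2_us <= t1_us:
        raise ValueError("second reflector must be deeper and its echo later")
    # Subtracting the two equations removes t0: v = 2*(d2 - d1) / (t2 - t1).
    velocity_mm_per_us = 2.0 * (d2_mm - d1_mm) / (t2_us - t1_us)
    # Back-substitute into the first equation to get the zero offset.
    zero_offset_us = t1_us - 2.0 * d1_mm / velocity_mm_per_us
    return velocity_mm_per_us * 1000.0, zero_offset_us  # 1 mm/us = 1000 m/s


# Hypothetical readings: first and second backwall echoes of a 25 mm section.
velocity, zero = two_point_calibration(25.0, 9.45, 50.0, 17.89)
print(f"velocity ~ {velocity:.0f} m/s, zero offset ~ {zero:.2f} us")
```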

4. Step-by-Step Calibration Procedure

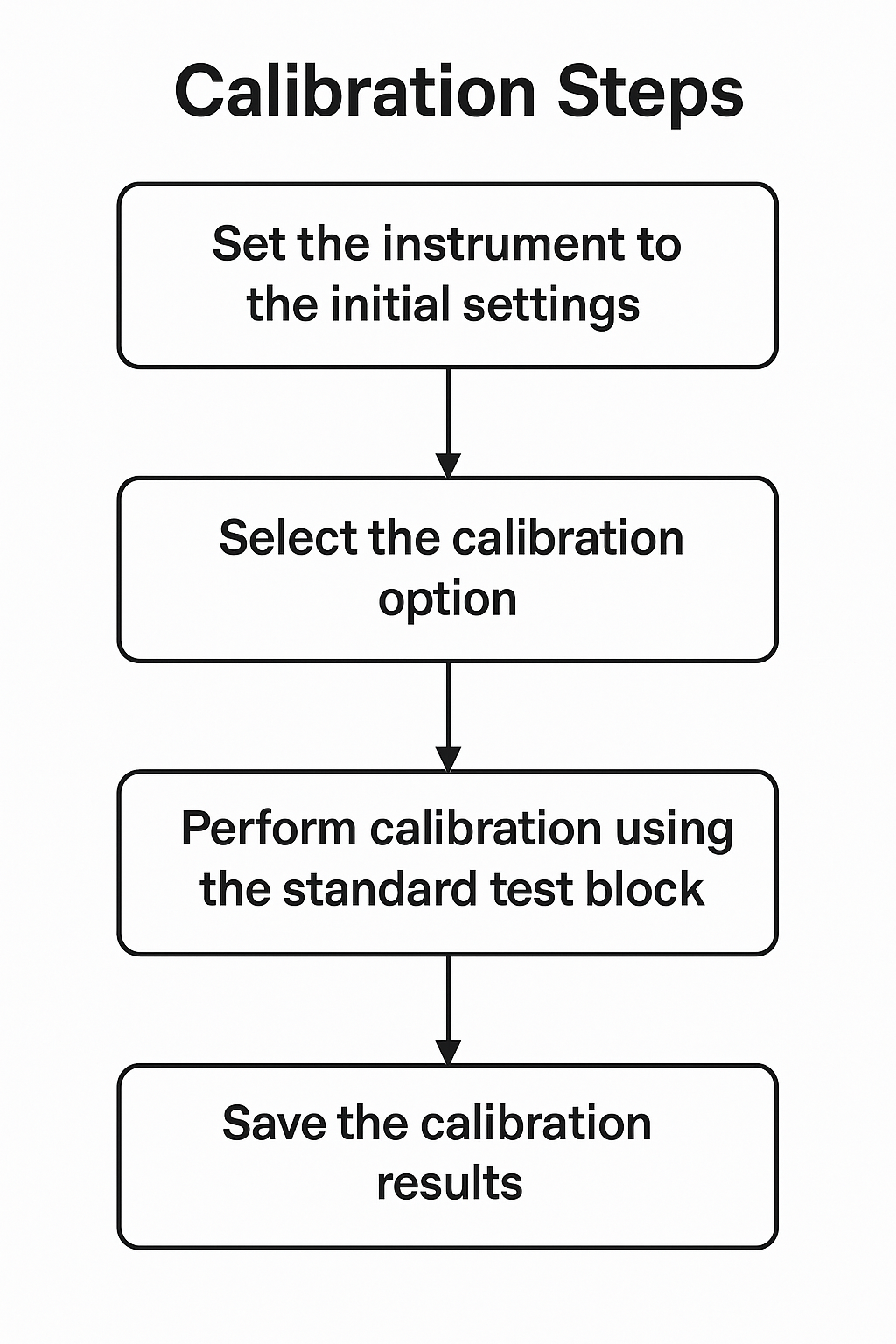

A standardized calibration process typically includes:

🔧 Step 1: Preparation

Ensure the calibration room meets the specified temperature and humidity requirements

Inspect the instrument’s appearance and check the power supply

🧪 Step 2: Functional Check

Verify that all controls, the screen, and the display functions operate normally

📏 Step 3: Performance Calibration

Use standard test blocks to adjust and verify key parameters

Ensure measurement accuracy and repeatability
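Repeatability is usually assessed by repeating the same measurement several times under unchanged conditions and looking at the spread of the results. A minimal sketch using the sample standard deviation from Python's `statistics` module; the function name, the readings, and the 0.1 mm limit are hypothetical.

```python
import statistics


def repeatability(readings: list[float]) -> tuple[float, float]:
    """Return the mean and sample standard deviation of repeated readings."""
    if len(readings) < 2:
        raise ValueError("at least two readings are needed")
    return statistics.mean(readings), statistics.stdev(readings)


# Hypothetical: five thickness readings (mm) at the same block position.
readings = [25.1, 25.0, 24.9, 25.0, 25.0]
mean, sd = repeatability(readings)
limit_mm = 0.1  # hypothetical acceptance limit on the standard deviation
print(f"mean = {mean:.3f} mm, s = {sd:.3f} mm, within limit: {sd <= limit_mm}")
```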

📑 Step 4: Documentation

Record the calibration data and issue a calibration certificate or report

Note any issues found and any adjustments made, recording values both before (as found) and after (as left) adjustment
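A calibration record is easier to audit when it keeps, for every parameter, the reference value, the value found before any adjustment (as found), and the value after adjustment (as left). A minimal sketch that writes such records as CSV with Python's standard `csv` module; the class, field names, parameter labels, and tolerances are hypothetical and do not represent a certificate format prescribed by ISO 22232 or JJG 746-2004.

```python
import csv
import io
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ParameterRecord:
    parameter: str
    reference: float
    as_found: float
    as_left: Optional[float]  # None when no adjustment was made
    tolerance: float          # hypothetical maximum permissible error

    def final_value(self) -> float:
        """Value the instrument indicates after calibration."""
        return self.as_left if self.as_left is not None else self.as_found

    def passed(self) -> bool:
        return abs(self.final_value() - self.reference) <= self.tolerance


def to_csv(records: list[ParameterRecord]) -> str:
    """Serialize records, adding a PASS/FAIL column based on the final value."""
    buf = io.StringIO()
    fields = ["parameter", "reference", "as_found", "as_left", "tolerance", "result"]
    writer = csv.DictWriter(buf, fieldnames=fields)
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["result"] = "PASS" if record.passed() else "FAIL"
        writer.writerow(row)  # a None as_left is written as an empty field
    return buf.getvalue()


records = [
    ParameterRecord("velocity_m_per_s", 5920.0, 5950.0, 5921.0, 10.0),
    ParameterRecord("depth_25mm", 25.0, 25.2, None, 0.5),
]
print(to_csv(records))
```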

It is critical that the procedure uses traceable reference standards and is carried out by qualified personnel.

5. Common Calibration Issues and Troubleshooting

| Issue | Possible Cause | Suggested Action |
|---|---|---|
| Low Sensitivity | Worn probe, loose connection, incorrect gain setting | Check probe wear, re-tighten connectors, reconfigure gain |
| High Noise Level | Poor grounding, power interference | Improve shielding, ensure proper grounding |
| Incorrect Time Base | Drifting internal clock | Recalibrate using certified time base reference |
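When a high noise level is suspected, quantifying it makes before-and-after comparisons (for example, after improving grounding) objective. One simple measure is the ratio between a reference echo and the highest noise indication at the same gain, expressed in decibels. A minimal sketch, assuming both amplitudes are read in % of full screen height within the display's linear range; the function name and readings are hypothetical, and any acceptance threshold would come from your own procedure.

```python
import math


def snr_db(echo_amplitude: float, noise_amplitude: float) -> float:
    """Signal-to-noise ratio in dB from two amplitudes read at the same gain."""
    if echo_amplitude <= 0 or noise_amplitude <= 0:
        raise ValueError("amplitudes must be positive")
    # Amplitude (not power) ratio, hence 20*log10.
    return 20.0 * math.log10(echo_amplitude / noise_amplitude)


# Hypothetical: reference echo at 80 % screen height, noise peaks at 8 %.
print(f"SNR = {snr_db(80.0, 8.0):.1f} dB")  # prints SNR = 20.0 dB
```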

Other influencing factors include:

Temperature fluctuations

Probe aging

Degradation of electronic components

Establishing a preventive maintenance and calibration plan can minimize these issues and extend instrument lifespan.

6. Conclusion and Recommendations

Regular metrological calibration of ultrasonic flaw detectors is vital for ensuring detection reliability and data accuracy. A proper calibration program helps:

✅ Detect performance drift early

✅ Improve product quality control

✅ Support compliance with industry and safety standards

With advances in automation and digital diagnostics, future calibration practices may include AI-driven or automated calibration platforms.

🔍 Final Suggestions:

Perform calibration every 6 to 12 months, or more frequently under harsh conditions

Use certified calibration blocks and traceable standards

Keep detailed calibration records and certificates

Partner with qualified metrology laboratories
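The interval suggestion above can be turned into a simple due-date rule for a calibration register. A minimal sketch, assuming a hypothetical policy of 12 months under normal use and 6 months under harsh conditions; the function names are illustrative, and the interval actually applied should follow your quality system and the applicable standard.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of the target month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_calibration_due(last_calibrated: date, harsh_conditions: bool) -> date:
    # Hypothetical policy: 6 months in harsh service, 12 months otherwise.
    interval_months = 6 if harsh_conditions else 12
    return add_months(last_calibrated, interval_months)


print(next_calibration_due(date(2024, 8, 31), harsh_conditions=True))  # 2025-02-28
print(next_calibration_due(date(2024, 3, 15), harsh_conditions=False))  # 2025-03-15
```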

By doing so, you ensure the safety, reliability, and efficiency of your inspection processes.