Sensitivity in Instrumentation

The sensitivity of an instrument describes how strongly its output responds to a change in the input quantity. Mathematically, it is the ratio of the change in output to the corresponding change in input. High sensitivity means that even small changes in the measured quantity produce a noticeable change in the output, while low sensitivity means that larger changes in the input are needed to alter the output.

How Sensitivity Works

Sensitivity is determined by the design and purpose of the instrument. For instance, a thermometer with high sensitivity can detect minor temperature fluctuations, which is useful where small changes are critical, such as in laboratories or medical equipment.

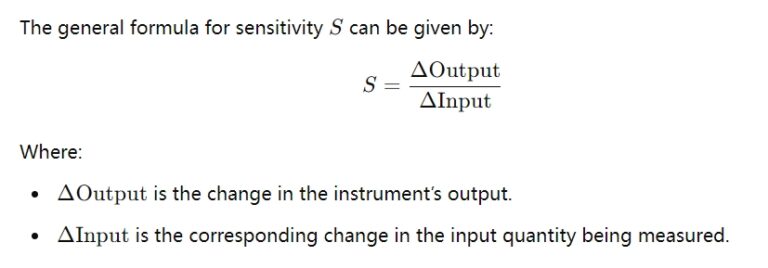

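Sensitivity Formula:

$$S = \frac{\Delta \text{Output}}{\Delta \text{Input}}$$

Here ΔOutput is the change in the instrument’s output and ΔInput is the corresponding change in the measured quantity, so S carries the units of output per unit of input.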

Example: Consider a temperature sensor with a sensitivity of 0.5 V/°C. This means for every 1°C change in temperature, the sensor’s output will change by 0.5 volts. This level of sensitivity allows the instrument to detect minor temperature changes, making it valuable in environments where precise temperature control is essential.
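The arithmetic in this example can be sketched in a few lines of Python. The function names and the calibration points below are hypothetical, chosen only for illustration, and the sketch assumes the sensor responds linearly over the range of interest.

```python
def output_change(sensitivity_v_per_degc: float, delta_temp_degc: float) -> float:
    """Change in sensor output (volts) for a given temperature change (°C)."""
    return sensitivity_v_per_degc * delta_temp_degc


def estimate_sensitivity(inputs, outputs):
    """Estimate sensitivity as (change in output) / (change in input),
    using the first and last calibration points (assumes a linear sensor)."""
    return (outputs[-1] - outputs[0]) / (inputs[-1] - inputs[0])


SENSITIVITY = 0.5  # V/°C, the value used in the example above

# A 1 °C change moves the output by 0.5 V.
print(output_change(SENSITIVITY, 1.0))

# Hypothetical calibration data: temperatures (°C) and measured outputs (V).
temps = [20.0, 25.0, 30.0]
volts = [10.0, 12.5, 15.0]
print(estimate_sensitivity(temps, volts))  # (15.0 - 10.0) / (30.0 - 20.0) = 0.5
```

Going the other way, from measured points back to a sensitivity figure, is what a simple calibration check does in practice.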

Factors Affecting Sensitivity

Sensitivity can vary with the instrument’s components, calibration, and environmental conditions. Drift can change the sensitivity over time, while noise and interference can mask the small output changes that a sensitive instrument is meant to reveal. Periodic recalibration may be required to maintain accuracy.

Types of Sensitivity

- Absolute Sensitivity: Refers to the sensitivity of the instrument without considering any offset or baseline level.

- Relative Sensitivity: Expresses the change in output relative to a baseline or offset level, which can affect how changes in input translate to changes in output.

Resolution in Instrumentation

Resolution is the smallest detectable increment of measurement an instrument can reliably display. It refers to the fineness of the measurement that the instrument can distinguish. Resolution is particularly important in applications where small changes in the measured quantity need to be detected and displayed accurately.

How Resolution Works

Resolution is defined by the smallest change in the input that the instrument can discern. For example, a digital scale with a resolution of 1 gram reports readings in increments of 1 gram. A finer resolution, such as 0.01 grams, allows more detailed measurements but can also make the reading more susceptible to noise and environmental factors.
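As a rough illustration, the effect of resolution on a displayed reading can be modelled by rounding the true value to the nearest step. This is a minimal sketch: `displayed_value` is a hypothetical helper, the sample mass is made up, and real instruments may truncate or filter rather than round.

```python
def displayed_value(true_value: float, resolution: float) -> float:
    """Round a reading to the nearest multiple of the instrument's resolution."""
    steps = round(true_value / resolution)  # whole number of resolution steps
    return steps * resolution


true_mass_g = 123.456  # hypothetical true mass in grams

# Formatting keeps floating-point artifacts out of the printed result.
print(f"{displayed_value(true_mass_g, 1.0):.2f} g")   # 1 g resolution
print(f"{displayed_value(true_mass_g, 0.01):.2f} g")  # 0.01 g resolution
```

With the 1 gram scale the fractional part is lost entirely, while the 0.01 gram scale keeps two decimal places of the same mass.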

Resolution Example:

Imagine a high-resolution digital caliper used in manufacturing. If the caliper has a resolution of 0.001 mm, it means that it can detect dimensional changes down to 0.001 mm. This high resolution is necessary in precision engineering, where even minute variations can impact the performance and quality of the final product.

Factors Affecting Resolution

Resolution is often determined by the instrument’s design and the quality of its components. Digital instruments, for instance, often have limited resolution due to the discrete steps available in their analog-to-digital converters (ADCs). Additionally, environmental conditions, such as vibrations and temperature, can limit the effective resolution of sensitive instruments.
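The ADC limit can be made concrete with a short calculation. The sketch below assumes an ideal converter whose step size is the full-scale range divided by 2^N. The 5 V range and the helper names are assumptions for illustration, and the 0.5 V/°C figure is borrowed from the temperature sensor example earlier in this article.

```python
def adc_step(full_scale_range: float, bits: int) -> float:
    """Smallest input step an ideal N-bit ADC can distinguish (one LSB)."""
    return full_scale_range / (2 ** bits)


def input_resolution(step_volts: float, sensitivity_v_per_unit: float) -> float:
    """Smallest change in the measured quantity that moves the ADC by one step."""
    return step_volts / sensitivity_v_per_unit


FULL_SCALE_V = 5.0  # assumed ADC input range in volts
SENSITIVITY = 0.5   # V/°C, from the earlier sensor example

for bits in (8, 12, 16):
    step = adc_step(FULL_SCALE_V, bits)
    print(f"{bits}-bit: step = {step:.6f} V, "
          f"temperature resolution = {input_resolution(step, SENSITIVITY):.6f} °C")
```

Under these assumptions, each additional bit halves the step size. Dividing the step by the sensor’s sensitivity shows how sensitivity and ADC resolution together set the smallest temperature change the system can register.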

Types of Resolution

- Spatial Resolution: Relevant in imaging or positional measurement, such as in cameras or scanners.

- Temporal Resolution: Refers to the minimum time interval that must separate two events for the instrument to distinguish them.

- Amplitude Resolution: Pertains to the smallest difference in amplitude (like voltage or temperature) an instrument can detect.

Sensitivity vs. Resolution: Key Differences and Interplay

While sensitivity and resolution are related, they describe different aspects of an instrument’s performance.

- Sensitivity is about the instrument’s responsiveness to changes in the measured quantity. High sensitivity means the instrument reacts more to small changes in input.

- Resolution is about the instrument’s ability to distinguish between small differences in measurements. High resolution allows the instrument to display very fine increments.

Example to Illustrate Both Concepts

Consider a digital thermometer with high sensitivity but low resolution. Its sensor might respond to a temperature change of 0.1°C, but if its display resolution is only 1°C, it will show temperature in whole degrees only. Hence, it might detect the change but be unable to represent it on the display.

Conversely, an instrument with high resolution but low sensitivity might accurately display tiny increments but fail to react to subtle changes in the measured quantity, especially if those changes are below the instrument’s threshold of detection.
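Both cases can be imitated with a small model. This is only a sketch under stated assumptions: the detection threshold is modelled as a simple dead band, the display rounds to the nearest step, and the function names and numeric values are hypothetical.

```python
def sensed_temperature(previous: float, actual: float, threshold: float) -> float:
    """Return the value the sensor registers. Changes smaller than the
    detection threshold are ignored (a simple dead-band assumption)."""
    return actual if abs(actual - previous) >= threshold else previous


def display(reading: float, resolution: float) -> float:
    """Round a reading to the nearest multiple of the display resolution."""
    return round(reading / resolution) * resolution


# Case 1: high sensitivity (no dead band), low resolution (1 °C display).
sensed = sensed_temperature(25.0, 25.1, threshold=0.0)
print(f"sensed {sensed:.2f} °C, displayed {display(sensed, 1.0):.2f} °C")

# Case 2: low sensitivity (0.5 °C dead band), high resolution (0.01 °C display).
sensed = sensed_temperature(25.0, 25.1, threshold=0.5)
print(f"sensed {sensed:.2f} °C, displayed {display(sensed, 0.01):.2f} °C")
```

In the first case the sensor registers 25.10 °C but the display still shows 25.00 °C. In the second, the display could show hundredths of a degree, yet the 0.1 °C change never gets past the sensor.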

Practical Importance of Sensitivity and Resolution

- In Medicine: High sensitivity and resolution are critical in devices like electrocardiograms (ECGs) or blood glucose monitors, where minute physiological changes need to be detected and displayed accurately.

- In Engineering: Precision instruments used in manufacturing, such as micrometers or laser measurement tools, require both high sensitivity and high resolution to ensure parts meet exact specifications.

- In Environmental Monitoring: Instruments used to measure air quality or pollution levels often require high sensitivity to detect low concentrations of pollutants, while high resolution enables differentiation between very small concentration changes.

Balancing Sensitivity and Resolution

Designing an instrument often involves trade-offs between sensitivity and resolution. High sensitivity can make an instrument prone to noise, while high resolution can increase cost and complexity. Therefore, designers need to balance these factors based on the intended application, ensuring that the instrument provides both accurate and practical measurements.

Summary

Sensitivity and resolution are crucial attributes that shape how well an instrument can measure and represent data:

- Sensitivity is the instrument’s responsiveness to changes in the input.

- Resolution is the smallest change that the instrument can distinguish and display.

Understanding these concepts and how they interact allows engineers, scientists, and professionals to select and design instruments tailored to specific measurement needs.