1. Introduction

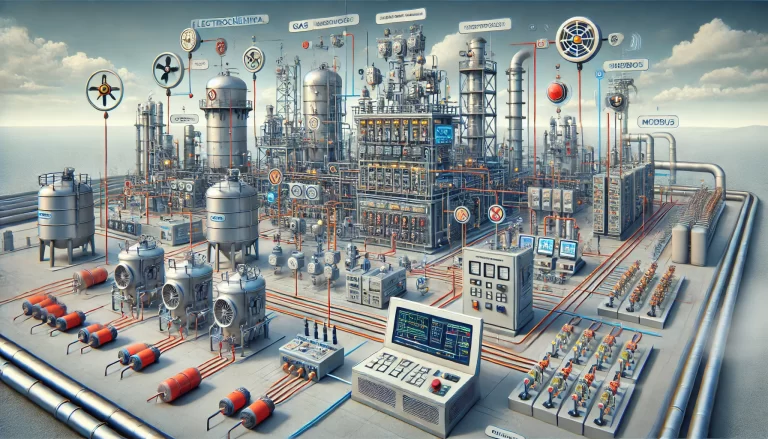

In chemical, petrochemical, and power industries, the Distributed Control System (DCS) acts as the central nervous system of the plant. It monitors process parameters, executes interlocks, and ensures stable operations.

A DCS failure usually means a loss of process control, with consequences ranging from partial shutdowns to plant-wide outages or even major safety incidents.

For this reason, operators, instrument technicians, and engineers must understand:

The working principles of the DCS,

Common failure scenarios, and

Effective emergency handling measures.

This document combines principle explanations, scenario-based cases, and step-by-step operational guidelines to enhance both understanding and practical response capability.

2. What Is a DCS Failure?

Although a modern DCS is built with redundancy (dual power supplies, dual controllers, dual networks), failures still occur. The main categories are:

2.1 Communication Network Failure

Description: The network is the “blood vessels” of the system. If it is blocked, operator stations lose contact with field devices.

Typical causes: Loose cable connectors, moisture ingress in fiber-optic links, cooling fan failure in switches.

Analogy: Like a circulation disorder that causes numbness or unconsciousness.

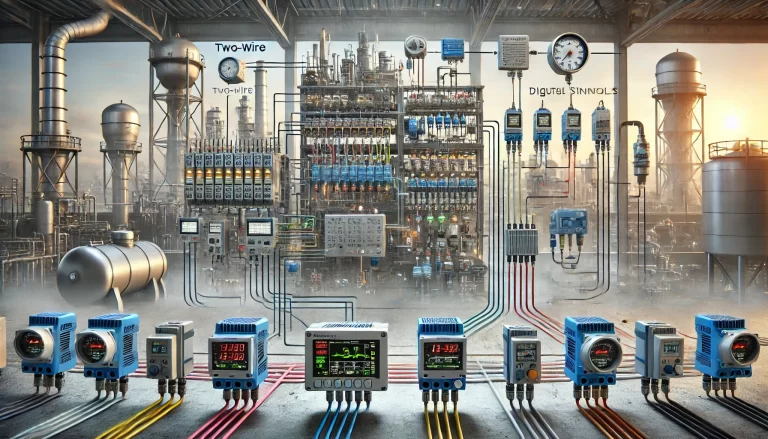

2.2 Controller or I/O Module Failure

Controllers = the “brain”.

I/O modules = the “nerve endings” linking sensors and actuators.

Failure leads to either unprocessed signals or inoperable control valves/pumps.

2.3 Power Supply Failure

The DCS relies on a UPS (Uninterruptible Power Supply).

Risks: Aged batteries, failed switchover, or complete UPS shutdown.

Note: The UPS is often a “silent killer” because its degradation goes unnoticed until it fails.

2.4 Operator Station Failure

The “cockpit” of operations.

If a station freezes or crashes, controller-level control keeps running, but operators cannot send commands from that station.

3. Failure Symptoms and Field Observations

When a DCS failure occurs, several signs can be observed:

Alarms: Audible/visual alerts with fault messages.

Frozen screens: Parameters stop updating.

Interlock failures: Key equipment does not shut down when limits are exceeded.

Process fallback: Valves revert to their fail-safe states (fail-closed (FC) valves → closed; fail-open (FO) valves → open).

👉 Practical Tip: Operators should immediately confirm valve positions and pump status physically, instead of relying only on the screen.
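
The field check described in the tip can be recorded and compared against the expected fail-safe states. The following is a minimal sketch, not a vendor tool: the tag names, the `ValveCheck` record, and the `find_mismatches` helper are all hypothetical and only illustrate the FC → closed, FO → open rule.

```python
from dataclasses import dataclass

# Fail action -> position a valve should take in its fail-safe state.
# FC = fail-closed, FO = fail-open.
EXPECTED_POSITION = {"FC": "closed", "FO": "open"}


@dataclass
class ValveCheck:
    tag: str             # hypothetical tag name, e.g. "FV-101"
    fail_action: str     # "FC" or "FO"
    field_position: str  # position confirmed at the valve: "open" or "closed"


def find_mismatches(checks):
    """Return tags whose field-confirmed position differs from the fail-safe state."""
    mismatches = []
    for check in checks:
        expected = EXPECTED_POSITION[check.fail_action]
        if check.field_position != expected:
            mismatches.append(check.tag)
    return mismatches


# Example walkdown results (illustrative data only).
walkdown = [
    ValveCheck("FV-101", "FC", "closed"),
    ValveCheck("LV-203", "FO", "open"),
    ValveCheck("PV-305", "FC", "open"),  # did not reach its fail-safe state
]
print(find_mismatches(walkdown))
```

Any tag returned by `find_mismatches` is a valve that should be inspected at the valve itself before the screen value is trusted.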

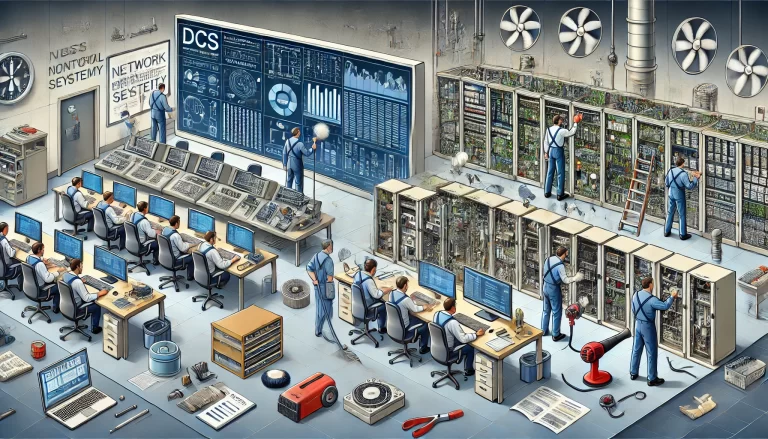

4. Emergency Role Allocation

A successful response requires clear division of responsibilities:

| Role | Responsibility |
|---|---|
| Workshop Manager | Incident commander: coordination, resource allocation, root-cause tracking. |
| Technician | Rush to site with tools/spares, identify problem sources. |
| DCS Maintenance | Switch controllers, replace modules, troubleshoot in control room and field. |
| Operators | Adjust process manually, switch valves/pumps to manual control if needed. |

5. Practical Emergency Workflow (7 Steps)

1. Rapid Reporting: Notify the workshop manager within 5 minutes.
2. Team Action:
   - Control Room Team: Diagnose system-wide issues.
   - Field Team: Verify equipment conditions.
3. Technical Briefing: Define safe vs. unsafe operations.
4. Process Adjustment: Prepare manual or bypass operation.
5. Fault Diagnosis:
   - Network: Inspect cables and switches; perform ping tests.
   - Controllers: Check redundancy sync status.
   - Power: Verify UPS mode and logs.
6. Equipment Replacement: Replace faulty modules while using ESD protection.
7. System Recovery: Power restoration → Server startup → Gradual operator station reboot.
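
The ping tests in the network diagnosis step can be scripted from a maintenance laptop. The sketch below is one possible approach, assuming the DCS nodes answer ICMP echo and that the laptop has the operating system's `ping` utility; the node names and addresses are hypothetical placeholders. Exit-code behavior of `ping` varies somewhat between platforms, so results should be confirmed by hand when in doubt.

```python
import platform
import subprocess


def ping_command(host, count=2):
    """Build the ping command; Windows uses -n for the count, other systems use -c."""
    flag = "-n" if platform.system() == "Windows" else "-c"
    return ["ping", flag, str(count), host]


def run_ping(host):
    """Return True if the ping utility exits with code 0 for this host."""
    try:
        result = subprocess.run(
            ping_command(host),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=10,
        )
    except (subprocess.TimeoutExpired, OSError):
        return False
    return result.returncode == 0


def unreachable_nodes(nodes, ping=run_ping):
    """Return the names of nodes that do not answer ping."""
    return [name for name, address in nodes.items() if not ping(address)]


# Hypothetical node list; replace with the plant's actual addresses.
NODES = {
    "switch-A": "192.168.1.1",
    "controller-1": "192.168.1.10",
    "operator-station-1": "192.168.1.20",
}


def fake_ping(address):
    """Stand-in for run_ping so the example runs without a network."""
    return address != "192.168.1.10"


print(unreachable_nodes(NODES, ping=fake_ping))
```

On site, calling `unreachable_nodes(NODES)` without the `ping` argument uses the real `ping` utility. A single unreachable node points toward that device or its cable, while many unreachable nodes behind the same switch point toward the switch.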

6. Typical Scenarios and Response

| Scenario | Symptom | Response Action |
|---|---|---|
| Network Interruption | Frozen screens, no data updates | Check switch power/fan; test network via laptop. |
| Controller Failure | Main/backup not synchronized | Restart backup controller; if both fail → prepare shutdown. |
| UPS Failure | Blackout, system offline | Check bypass mode; verify battery; immediately back up DCS configuration files. |
| Operator Station Crash | Single station unresponsive | Restart station; if unresolved, switch to backup station; check DB integrity. |
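
The "no data updates" symptom in the first row can also be checked from timestamps rather than by watching the screen. The sketch below assumes that the last update time of each tag is available in some form (for example from a historian export); the tag names, the `stale_tags` helper, and the 10-second limit are illustrative assumptions, not values from any vendor.

```python
from datetime import datetime, timedelta


def stale_tags(last_update, now, max_age=timedelta(seconds=10)):
    """Return, in sorted order, the tags whose last update is older than max_age."""
    return sorted(tag for tag, stamp in last_update.items() if now - stamp > max_age)


# Illustrative data: the time of the check and the last update of each tag.
now = datetime(2024, 1, 1, 12, 0, 30)
last_update = {
    "TI-101": datetime(2024, 1, 1, 12, 0, 28),  # 2 s old: live
    "PI-202": datetime(2024, 1, 1, 12, 0, 5),   # 25 s old: stale
    "FI-303": datetime(2024, 1, 1, 12, 0, 1),   # 29 s old: stale
}
print(stale_tags(last_update, now))
```

If many tags from different controllers go stale at the same moment, a communication problem is a more likely cause than a set of simultaneous instrument faults.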

7. Power Restoration Best Practices

Different vendors show different recovery behavior:

| Vendor/System | Post-Power-Loss Behavior |
|---|---|
| Honeywell PKS | Valves re-initialize (FC closed, FO open). Manual confirmation required. |
| Triconex | Battery retains program; if failed, re-download program required. |
| HollySys | Valves go to fail-safe state; may need manual re-initialization. |
| Siemens | Usually auto-restores; if not, reload hardware configuration. |

👉 Tip: Never start all operator stations at once. Boot one first, confirm it is stable, then start the others one at a time to limit the load on the system.
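
The one-at-a-time startup in the tip can be expressed as a simple loop. This is a sketch of the sequencing idea only: `start` and `is_healthy` are hypothetical callbacks standing in for whatever the site uses to power on a station and confirm it is running, and the 60-second default delay is an assumed value.

```python
import time


def staggered_startup(stations, start, is_healthy, delay_s=60, sleep=time.sleep):
    """Start stations one by one and stop at the first one that fails its check.

    Returns (started, failed): the stations confirmed healthy, and the station
    that failed its check (or None if all of them came up).
    """
    started = []
    for station in stations:
        start(station)
        sleep(delay_s)  # give the station time to load before checking it
        if not is_healthy(station):
            return started, station
        started.append(station)
    return started, None


# Demonstration with stand-in callbacks and no delay (illustrative only).
log = []
started, failed = staggered_startup(
    ["OS-1", "OS-2", "OS-3"],
    start=log.append,
    is_healthy=lambda station: station != "OS-3",
    delay_s=0,
)
print(started, failed)
```

Stopping at the first unhealthy station keeps a single bad station from being masked by the load of the others starting behind it.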

8. Field Experience and Best Practices

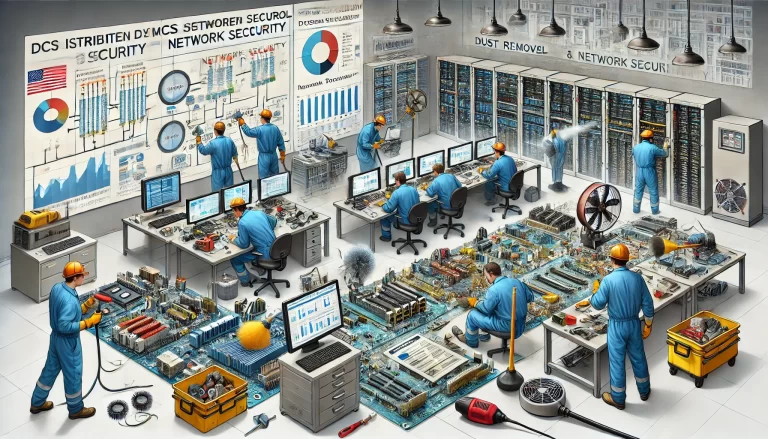

8.1 Inspection Points

Regular UPS battery discharge tests.

Switch cleaning and cooling checks.
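
A discharge test is easier to act on when its result is reduced to a single number. The sketch below compares the measured runtime with the rated runtime at the same load. The 80% replacement threshold is a commonly cited rule of thumb for lead-acid batteries and is an assumption here; the battery manufacturer's criteria take precedence, and temperature correction is deliberately left out.

```python
def capacity_percent(measured_minutes, rated_minutes):
    """Measured runtime as a percentage of rated runtime at the same load."""
    if rated_minutes <= 0:
        raise ValueError("rated_minutes must be positive")
    return 100.0 * measured_minutes / rated_minutes


def needs_replacement(measured_minutes, rated_minutes, threshold_percent=80.0):
    """Return True if capacity is below the threshold (assumed 80% by default)."""
    return capacity_percent(measured_minutes, rated_minutes) < threshold_percent


# Illustrative figures: a battery rated for 30 minutes at the test load.
print(capacity_percent(24, 30))   # 80.0
print(needs_replacement(24, 30))  # False
print(needs_replacement(21, 30))  # True
```

Logging the percentage from each test also shows the trend, which helps catch a battery that is still above the threshold but declining quickly.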

8.2 Common Pitfalls

Loose RJ45 connectors — frequently overlooked root cause.

Inserting/removing modules too quickly — risk of secondary damage.

8.3 Drill & Training

Quarterly emergency drills, especially total blackout → manual mode switchover.

8.4 Toolbox Essentials

Anti-static wrist strap, spare network cables, fiber tester, UPS bypass card.

9. Conclusion

The DCS is the nervous system of a plant — essential yet fragile.

Real operational capability lies not in memorizing thick manuals, but in:

Familiarity with emergency procedures,

Repeated practical drills,

Continuous accumulation of experience.

👉 Only by turning emergency response into muscle memory can operators safeguard both production and safety.