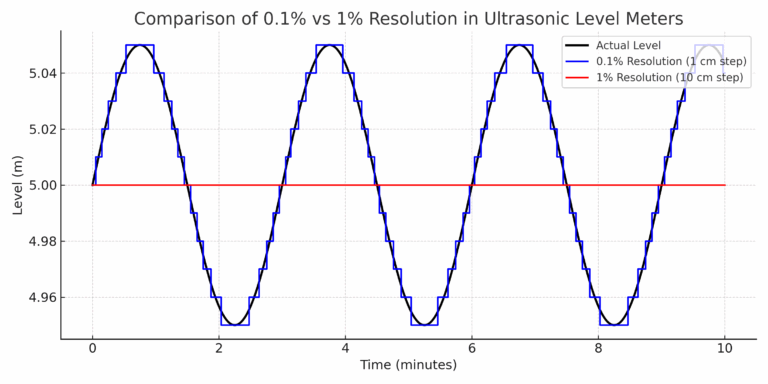

Resolution is one of the most overlooked specifications when selecting an ultrasonic level meter. Yet, it plays a critical role in determining how precisely the instrument can detect changes in liquid level.

In this article, we’ll explain what resolution really means, show practical examples, and help you decide whether 0.1% or 1% resolution is right for your application.

1. What Does “Resolution” Mean in a Level Meter?

Resolution is the smallest change in level that the meter can detect and display.

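It is usually expressed as a percentage of the full measuring range. Note that resolution is not the same as accuracy: a meter can display 1 cm steps while its reading is still off by more than 1 cm.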

Formula:

Minimum Detectable Change = Measuring Range × Resolution

For example, if the measuring range is 10 meters:

0.1% resolution → 10 m × 0.1% = 1 cm

1% resolution → 10 m × 1% = 10 cm
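The formula is simple enough to check in a few lines. The sketch below is illustrative only; the function name `min_detectable_change_m` is hypothetical, and resolution is passed as a percentage (0.1 for 0.1%).

```python
def min_detectable_change_m(range_m: float, resolution_pct: float) -> float:
    """Smallest level step in metres for a given range and resolution.

    resolution_pct is a percentage, e.g. 0.1 means 0.1 %.
    """
    return range_m * resolution_pct / 100.0


# Reproduce the 10 m example for both resolutions
for pct in (0.1, 1.0):
    step_cm = min_detectable_change_m(10.0, pct) * 100.0
    print(f"{pct}% of 10 m -> {step_cm:.1f} cm")
```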

2. Practical Example

Let’s compare the two in a real-world scenario:

| Specification | 0.1% Resolution | 1% Resolution |
|---|---|---|
| Measuring Range | 10 m | 10 m |
| Minimum Detectable Change | 1 cm | 10 cm |
| Display Sensitivity | Updates for every 1 cm change | Updates only for every 10 cm change |
| Data Granularity | Very fine detail | Coarser detail |
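To see what "display sensitivity" means in practice, the sketch below models the display as snapping the true level to the nearest multiple of the resolution step. This rounding rule is an assumption for illustration; real meters may truncate or round differently. The function name `displayed_level_m` and the 4.30 m to 4.34 m rise are hypothetical.

```python
def displayed_level_m(true_level_m: float, range_m: float, resolution_pct: float) -> float:
    """Hypothetical display model: snap the level to the nearest resolution step."""
    step_m = range_m * resolution_pct / 100.0
    return round(true_level_m / step_m) * step_m


# A 4 cm rise on a 10 m range
for pct in (0.1, 1.0):
    before = displayed_level_m(4.30, 10.0, pct)
    after = displayed_level_m(4.34, 10.0, pct)
    print(f"{pct}%: {before:.2f} m -> {after:.2f} m")
```

Under this assumption the 4 cm rise appears on the 0.1% display (4.30 m to 4.34 m), while the 1% display stays at 4.30 m.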

3. Where the Difference Really Matters

A. High-Precision Inventory and Batch Control

If you are managing expensive chemicals, fuel, or other high-value liquids, every centimeter counts.

A 0.1% resolution meter can track even the smallest changes, making it ideal for precise stock monitoring, batch dosing, and inventory reconciliation.
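For inventory work it often helps to translate the level step into volume. The sketch below assumes a vertical cylindrical tank with a flat bottom, where volume per step is simply cross-sectional area times step height. The 3 m diameter and the function name `volume_per_step_litres` are hypothetical.

```python
import math


def volume_per_step_litres(diameter_m: float, range_m: float, resolution_pct: float) -> float:
    """Volume represented by one resolution step in a vertical cylindrical tank (assumed geometry)."""
    step_m = range_m * resolution_pct / 100.0
    area_m2 = math.pi * (diameter_m / 2.0) ** 2
    return area_m2 * step_m * 1000.0  # 1 m^3 = 1000 L


# Hypothetical 3 m diameter tank with a 10 m measuring range
for pct in (0.1, 1.0):
    print(f"{pct}%: {volume_per_step_litres(3.0, 10.0, pct):.1f} L per step")
```

In this example one step corresponds to roughly 71 L at 0.1% but roughly 707 L at 1%, which is the quantity that matters when reconciling high-value stock.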

B. Process Automation

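When your control logic depends on small level variations (e.g., pump start/stop with 2–3 cm hysteresis), a 1% resolution meter may be too coarse and cause delayed or inaccurate control actions. On a 10 m range its 10 cm step is larger than the entire 2–3 cm band, so the controller cannot see the level move within that band.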
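The delay can be illustrated with a small simulation. The sketch below makes several hypothetical assumptions: levels are tracked in whole millimetres (to avoid floating-point error), the display rounds half up to the nearest step, the level rises 1 mm per scan, and the pump-start setpoint is 2030 mm with the level starting at 2000 mm. The function names are illustrative only.

```python
def quantize_mm(level_mm: int, step_mm: int) -> int:
    """Round half up to the nearest display step (assumed display behaviour)."""
    return (level_mm + step_mm // 2) // step_mm * step_mm


def trigger_level_mm(setpoint_mm: int, step_mm: int, start_mm: int) -> int:
    """True level at which a rising level first reads at or above the setpoint."""
    level = start_mm
    while quantize_mm(level, step_mm) < setpoint_mm:
        level += 1  # hypothetical: level rises 1 mm per scan
    return level


# 10 mm = 0.1 % and 100 mm = 1 % of a 10 m range
for step in (10, 100):
    print(f"step {step} mm -> pump starts at true level {trigger_level_mm(2030, step, 2000)} mm")
```

With these assumptions the 0.1% meter trips at a true level of 2025 mm, while the 1% meter's reading jumps straight from 2000 mm to 2100 mm and trips only at 2050 mm, 20 mm past the setpoint.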

C. Liquid Surfaces with Turbulence

High resolution is not always better. In tanks where the liquid surface fluctuates due to agitation or flow, a 0.1% meter may show constant small changes, requiring filtering or signal damping. In such cases, 1% resolution can provide a more stable reading.
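One common form of signal damping is a first-order (exponential) filter. The sketch below shows that technique in general terms; it is not a description of any particular meter's damping setting, which may work differently. The function name `damped`, the default `alpha`, and the sample readings are hypothetical.

```python
def damped(readings: list[float], alpha: float = 0.2) -> list[float]:
    """First-order exponential smoothing; a smaller alpha gives heavier damping."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: list[float] = []
    value = None
    for r in readings:
        # Move the filtered value a fraction alpha toward each new reading
        value = r if value is None else value + alpha * (r - value)
        out.append(value)
    return out


# Hypothetical readings (m) from a turbulent surface near 2.00 m
noisy = [2.00, 2.03, 1.98, 2.02, 1.99, 2.01, 2.00]
smooth = damped(noisy)
print([f"{v:.3f}" for v in smooth])
```

Heavier damping steadies the reading but also slows its response to real level changes, so the filter strength is a trade-off rather than a free gain.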

D. General Monitoring

For simple applications like water towers, sewage pits, or irrigation tanks, where only general high/low levels matter, 1% resolution is more than adequate.

4. Cost Considerations

While 0.1% resolution meters are typically slightly more expensive than 1% models, the price difference is usually small compared to the potential benefits in measurement detail, process efficiency, and inventory control.

5. Choosing the Right Resolution

Select 0.1% Resolution if:

You require precise measurement for high-value liquids.

You need accurate batch control or inventory tracking.

Small level changes matter to your process control.

Select 1% Resolution if:

Your application is for general monitoring.

Liquid level changes are large and gradual.

Turbulence or surface waves could cause unnecessary fluctuations in high-resolution readings.

Conclusion

The choice between 0.1% and 1% resolution is not about “better” or “worse” — it’s about matching the instrument to your application needs.

If your process demands fine measurement and precise control, go for 0.1%.

If you just need a reliable reading without unnecessary detail, 1% is a practical and cost-effective option.