In the world of industrial measurement and control, accuracy is more than just a number on a datasheet — it’s the foundation for safe, efficient, and reliable operations. Whether you’re calibrating a pressure transmitter, troubleshooting a flow meter, or preparing for a certification exam, understanding key error concepts will help you select, operate, and maintain instruments with confidence.

1. Accuracy vs. Accuracy Class

Accuracy refers to how close a measured value is to the true value. High accuracy means both systematic and random errors are minimal.
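To make the split between systematic and random error concrete, here is a minimal Python sketch. It takes repeated readings of one fixed input against a known reference value. The function name `error_summary` and the sample readings are hypothetical. The mean error stands in for the systematic part, and the sample standard deviation stands in for the random part.

```python
import statistics


def error_summary(readings, true_value):
    """Split repeated readings into systematic and random error components.

    readings   -- repeated readings of one fixed input (at least two values)
    true_value -- the conventional true value, e.g. from a reference standard
    """
    errors = [r - true_value for r in readings]
    bias = statistics.mean(errors)       # systematic component (constant offset)
    spread = statistics.stdev(errors)    # random component (sample std deviation)
    worst = max(abs(e) for e in errors)  # largest single deviation observed
    return bias, spread, worst


if __name__ == "__main__":
    # Hypothetical readings taken at a 100.0 kPa reference pressure.
    bias, spread, worst = error_summary([100.2, 100.1, 100.3], 100.0)
    print(f"bias={bias:.2f} kPa, spread={spread:.2f} kPa, worst={worst:.2f} kPa")
```

An instrument counts as accurate only when both `bias` and `spread` are small. A large value of either pushes `worst` toward or beyond the permissible limit.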

Accuracy class categorizes instruments based on their maximum permissible error under standard conditions, expressed as a percentage of the span (for a zero-based range, the span equals the full scale). For example, if a pressure gauge has a maximum basic error of ±1.5% of its full scale, it belongs to Class 1.5, which is often marked inside a circle on its dial.
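The relationship between class, span, and permissible error is simple arithmetic. The sketch below assumes that the class number is the permissible error as a percentage of span. The helper names and the `CLASS_SERIES` tuple are illustrative assumptions, because the actual class series depends on the governing standard and the instrument type.

```python
# Hypothetical class series for illustration only; real series depend on the
# applicable standard and instrument type.
CLASS_SERIES = (0.1, 0.2, 0.25, 0.5, 1.0, 1.5, 2.5, 4.0)


def allowable_error(accuracy_class, range_low, range_high):
    """Maximum permissible error in engineering units: class % of the span."""
    return accuracy_class / 100 * (range_high - range_low)


def reduced_error_percent(max_abs_error, range_low, range_high):
    """Largest observed error expressed as a percentage of the span."""
    return max_abs_error / (range_high - range_low) * 100


def smallest_class_met(reduced_error, series=CLASS_SERIES):
    """Return the tightest class whose limit is not exceeded, or None."""
    for cls in sorted(series):
        if reduced_error <= cls + 1e-9:  # small tolerance for float rounding
            return cls
    return None
```

For example, a 0–1.6 MPa gauge whose worst observed error is 0.02 MPa has a reduced error of 1.25%. Under this assumed series it would fall into Class 1.5, which permits ±0.024 MPa.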

2. Choosing a Reference Instrument for Calibration

When using the direct comparison method — where the instrument under test is directly compared with a standard instrument — follow these rules:

Match the type – If calibrating a DC voltmeter, use a DC voltmeter as the standard.

Match the range – The standard’s range should be close to, but not significantly higher than, the test instrument’s range. Typically, the standard’s upper limit should exceed the test instrument’s by no more than 25%.

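Superior accuracy – The standard’s permissible error should be no more than one third of the test instrument’s permissible error. In other words, the standard should be at least three times more accurate.

Example: If your pressure gauge is Class 1.0 and the standard has a comparable range, the standard should be Class 0.3 or better (1.0 ÷ 3 ≈ 0.33). If the ranges differ, compare the permissible errors in engineering units rather than the class numbers.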
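The three rules can be expressed as a small checklist. This is a sketch built on several assumptions. `Instrument` and `check_standard` are hypothetical names. The range rule is interpreted as "the standard must cover the test range, and its upper limit may exceed the test instrument's by at most 25%". Accuracy is compared in engineering units, so that instruments with different ranges are judged fairly.

```python
from dataclasses import dataclass


@dataclass
class Instrument:
    quantity: str          # what is measured, e.g. "DC voltage", "pressure"
    range_low: float
    range_high: float
    accuracy_class: float  # permissible error as a % of span (assumption)

    @property
    def span(self):
        return self.range_high - self.range_low

    @property
    def max_error(self):
        """Permissible error in engineering units."""
        return self.accuracy_class / 100 * self.span


def check_standard(test, standard, max_range_excess=0.25, min_ratio=3.0):
    """Return a list of rule violations; an empty list means the standard looks suitable."""
    problems = []
    # Rule 1: the standard must measure the same kind of quantity.
    if standard.quantity != test.quantity:
        problems.append("type mismatch")
    # Rule 2 (assumed interpretation): cover the test range, and do not
    # exceed the test instrument's upper limit by more than 25 %.
    if standard.range_low > test.range_low or standard.range_high < test.range_high:
        problems.append("standard does not cover the test range")
    elif standard.range_high > test.range_high * (1 + max_range_excess):
        problems.append("standard range too large")
    # Rule 3: compare permissible errors in engineering units, not class numbers.
    if standard.max_error * min_ratio > test.max_error + 1e-12:
        problems.append("standard not accurate enough")
    return problems
```

Take a 0–100 kPa Class 1.0 gauge and a 0–100 kPa Class 0.3 standard. `check_standard` returns an empty list for this pair, because 3 × 0.3 kPa does not exceed 1.0 kPa.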

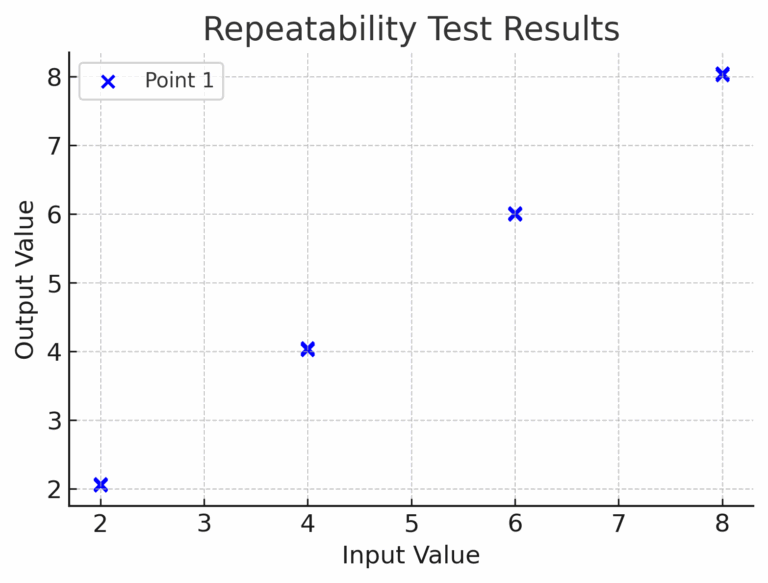

3. Repeatability vs. Reproducibility Errors

Repeatability error: The maximum difference among repeated measurements of the same input, taken under the same conditions and approaching from the same direction.

Reproducibility error: The maximum difference among repeated measurements of the same input, taken under the same conditions over a period of time and approaching from both directions (increasing and decreasing input).

Reproducibility error therefore includes repeatability, hysteresis, dead zone, and drift.

Tip: Repeatability reflects short-term consistency, while reproducibility reflects how an instrument behaves over time in real-world scenarios where inputs move in both directions.
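Both figures can be estimated from ordinary calibration records. The sketch below assumes a hypothetical data layout: each input point maps to lists of upscale and downscale outputs from several full-range traverses. For a true reproducibility figure, those traverses should be spread over the specified period so that drift is captured.

```python
def repeatability_and_reproducibility(runs, span):
    """Estimate repeatability and reproducibility errors as % of output span.

    runs -- hypothetical layout: {input_value: {"up": [...], "down": [...]}},
            where each list holds the outputs recorded at that input on
            successive upscale or downscale traverses.
    span -- output span used to normalize the results.
    """
    repeatability = 0.0
    reproducibility = 0.0
    for readings in runs.values():
        # Same direction only: spread among the upscale readings, and
        # separately among the downscale readings.
        for direction in ("up", "down"):
            values = readings.get(direction, [])
            if len(values) > 1:
                repeatability = max(repeatability, max(values) - min(values))
        # Both directions pooled: spread over every reading at this input.
        pooled = readings.get("up", []) + readings.get("down", [])
        if pooled:
            reproducibility = max(reproducibility, max(pooled) - min(pooled))
    return repeatability / span * 100, reproducibility / span * 100
```

Consider one point on a 4–20 mA output with upscale readings of 12.00, 12.02, and 12.01 mA and downscale readings of 12.06, 12.05, and 12.08 mA. The function reports roughly 0.19% repeatability and 0.5% reproducibility. The gap between the two comes mostly from the up/down difference.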

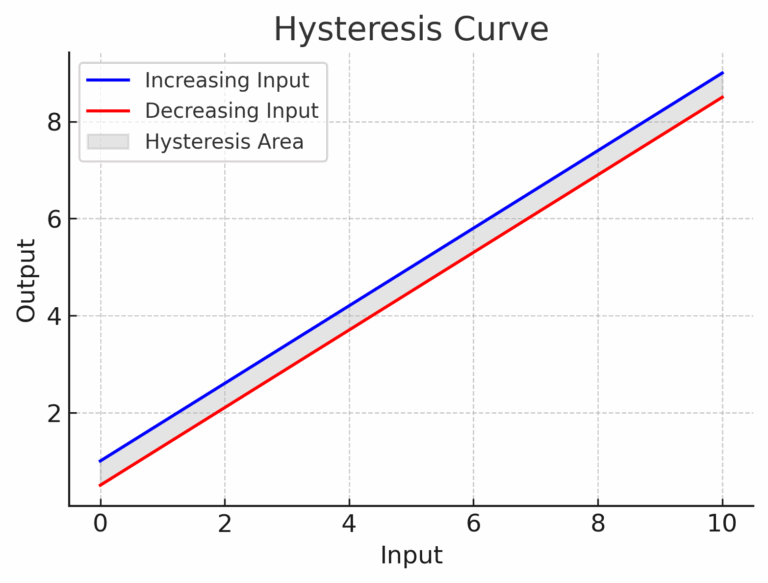

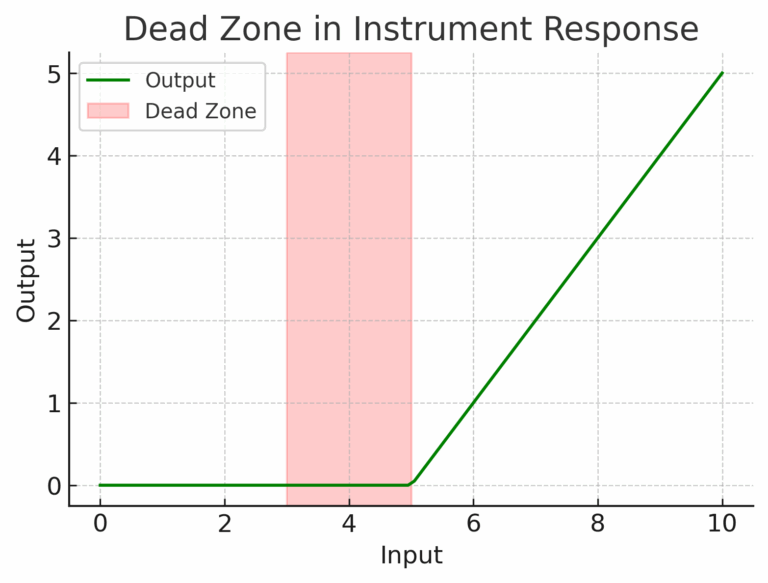

4. Hysteresis, Dead Zone, and Backlash

Hysteresis: The difference between readings at the same input value when the input is increased versus decreased, excluding the effect of dead zone.

Dead zone: The range through which the input can change without causing any observable change in output.

Backlash: The total output difference for the same input value between increasing and decreasing input cycles. It includes both hysteresis and dead zone.
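Backlash can be read directly from paired upscale and downscale calibration points. Dead zone needs a finer sweep taken right after the input reverses direction. The two helpers below sketch this; `backlash` and `dead_zone_estimate` are both hypothetical names. Splitting backlash further into hysteresis would require converting the dead zone into output units through the instrument's gain, and this sketch leaves that step out.

```python
def backlash(upscale, downscale):
    """Largest output difference at matching input points between the
    increasing and decreasing traverses (hysteresis and dead zone combined).

    upscale, downscale -- outputs recorded at the same input points, in the
    same order, while the input rises and while it falls.
    """
    if len(upscale) != len(downscale):
        raise ValueError("both traverses must use the same input points")
    return max(abs(u - d) for u, d in zip(upscale, downscale))


def dead_zone_estimate(inputs, outputs, resolution):
    """Input change needed before the output moves by more than `resolution`.

    inputs/outputs -- a fine sweep that starts right after a direction
    reversal. The result is an upper bound limited by the sweep's step size;
    None means no response was observed within the sweep.
    """
    start_out = outputs[0]
    for x, y in zip(inputs, outputs):
        if abs(y - start_out) > resolution:
            return abs(x - inputs[0])
    return None
```

A smaller sweep step tightens the dead-zone estimate. The backlash figure, by contrast, depends only on the calibration points chosen.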

5. Why This Matters

Imagine calibrating a Class 1.0 pressure transmitter (20–100 kPa range, ±0.8 kPa allowable error, i.e. 1.0% of its 80 kPa span) with a standard gauge (0–600 kPa range, 0.35% accuracy). The standard gauge’s error is ±2.1 kPa (0.35% of 600 kPa), which is more than double the transmitter’s entire tolerance.

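Result: This gauge fails both the accuracy rule and the range rule (its 600 kPa upper limit is six times the transmitter’s 100 kPa), so it cannot serve as a valid standard. Using it could let real measurement errors go undetected.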
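The accuracy arithmetic behind this verdict can be written out explicitly. The worked lines below apply the one-third rule from section 2 and read the transmitter's ±0.8 kPa tolerance as Class 1.0 over its 80 kPa span. The symbols Δ_tx (transmitter) and Δ_std (standard) are notation introduced here for illustration.

```latex
\begin{aligned}
\Delta_{\text{tx}}  &= \pm 1.0\% \times (100 - 20)\ \text{kPa} = \pm 0.8\ \text{kPa} \\
\Delta_{\text{std}} &= \pm 0.35\% \times (600 - 0)\ \text{kPa} = \pm 2.1\ \text{kPa} \\
\Delta_{\text{std,max}} &= \tfrac{1}{3}\,\lvert\Delta_{\text{tx}}\rvert \approx 0.27\ \text{kPa} \\
\lvert\Delta_{\text{std}}\rvert / \lvert\Delta_{\text{tx}}\rvert &= 2.1 / 0.8 \approx 2.6
\end{aligned}
```

The required ±0.27 kPa is roughly one eighth of what the 0–600 kPa gauge can guarantee. A suitable standard would therefore need both a narrower range and a tighter class.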

Conclusion

Understanding these error types isn’t just academic — it’s practical. It ensures:

Better instrument selection for the right job.

More reliable calibrations with proper standards.

Fewer costly mistakes caused by overlooked measurement uncertainties.

Next time you see “±0.5%” or “Class 1.5” on an instrument, you’ll know exactly what it means — and why it matters.